EIDOLON / OBELISK

Behavioral Access Control for Deterministic Systems

Technical Overview – Public Release

Prizmatik

24 January 2026

Updated 8 February 2026

Patent Pending. Portions of the systems and methods described herein are the subject of one or more pending patent applications. Publication of this document is not a license and does not waive any rights.

1. Scope and Intent

This document describes a class of access-control mechanism that does not rely on static secrets, transferable keys, or perpetual authorization. It is not a product description, a roadmap, or a commercial proposal.

The system described here exists to explore a specific question:

What does access control look like when possession is no longer a reliable signal of authorization?

Eidolon is a visible proof-of-capability. Obelisk is the underlying primitive.

Development of both will continue independently of external involvement.

2. The Problem Space (Reimagined)

Most contemporary access-control systems reduce, at some layer, to possession-based authorization:

- possession of a key

- possession of a token

- possession of decrypted content in memory

This model fails predictably once:

- content must be rendered to a human

- the execution environment cannot be trusted

- observation itself becomes the attack surface

Attempts to mitigate this failure mode (DRM, secure enclaves, watermarking, legal enforcement) do not address the core issue. They raise the cost of extraction while preserving the same abstraction, and that abstraction is the problem. Once a system grants access because something is held, it has already lost control over how that access propagates.

3. The Primitive: Behavioral Invocation

Obelisk (Operational Behavioral Envelope for Latent Invocation and Secure Keying) introduces behavioral invocation as an access primitive.

Rather than granting access based on possession, Obelisk gates capability on the successful performance of a behavioral process that cannot be trivially recorded, replayed, or transferred.

Key properties of the primitive emerge from its operation rather than from configuration. Authorization is procedural rather than declarative; validation is time‑bound and embodied; and both failure and duress are non‑differentiable, such that partial success yields no usable output or only policy‑safe artifacts.

The system does not attempt to prevent observation. Instead, it assumes observation and remains resilient under those conditions.

4. Eidolon as an Embodied Expression

Eidolon is a consumer-facing embodied expression of the Obelisk framework.

Rather than exposing the primitive directly, Eidolon renders Obelisk legible through constrained, observable interaction. In this expression, the primitive no longer exists in any traditional form; it is encountered only through behavior. The payload likewise remains unmanifest: held in a classical superposition, it collapses into a specific artifact only upon successful, policy‑conformant invocation. The system’s behavior cannot be inspected, only experienced.

It demonstrates policy enforcement at render time, content that remains inert without successful behavioral validation, and graceful degradation under failed, adversarial, or coerced interaction.

Eidolon is intentionally constrained. It is not the core asset. Eidolon’s purpose is to express the Obelisk framework in a form that can be encountered without making the primitive itself available for extraction.

5. Cantus: Performance-Based Authorization

Cantus is the validation mechanism used in Eidolon.

Rather than authenticating who a user is or what they possess, Cantus evaluates how a user performs a defined interaction.

The interaction is learned rather than stored as a static secret. It incorporates timing, motion, and embodied variance, and is resistant to exact replay even by the performing user.

Importantly:

- Cantus does not identify the user

- Cantus does not persist biometric data

- Cantus does not guarantee uniqueness

The system guarantees presence.
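As a rough illustration of performance-based validation, the sketch below checks a sequence of timing intervals against a learned tolerance band and rejects exact repeats. It is not the Cantus mechanism, whose details are not published here. The names `TimingTemplate`, `learn`, and `validate`, the tolerance factor `k`, and the spread floor are all assumptions. The sketch also keeps timing statistics and attempt digests in memory for simplicity, which a design that does not persist biometric data would have to avoid or replace.

```python
import hashlib
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class TimingTemplate:
    """Hypothetical learned model: per-interval mean and spread, in seconds."""
    means: list[float]
    spreads: list[float]
    seen: set[str] = field(default_factory=set)  # digests of accepted attempts


def learn(samples: list[list[float]]) -> TimingTemplate:
    """Derive a tolerance band from several practice performances."""
    columns = list(zip(*samples))
    return TimingTemplate(
        means=[mean(c) for c in columns],
        # Floor the spread so a very consistent performer is not locked out.
        spreads=[max(pstdev(c), 0.02) for c in columns],
    )


def validate(template: TimingTemplate, attempt: list[float], k: float = 3.0) -> bool:
    """Accept an attempt that fits the band but is not an exact repeat."""
    if len(attempt) != len(template.means):
        return False
    key = hashlib.sha256(repr([round(t, 4) for t in attempt]).encode()).hexdigest()
    if key in template.seen:
        return False  # exact replay: a live performance is assumed never to repeat exactly
    within = all(
        abs(t - m) <= k * s
        for t, m, s in zip(attempt, template.means, template.spreads)
    )
    if within:
        template.seen.add(key)
    return within
```

Note that the check says nothing about who performed the interaction: any performer who lands inside the band passes, which is consistent with the statement that uniqueness is not guaranteed.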

6. Architectural Overview

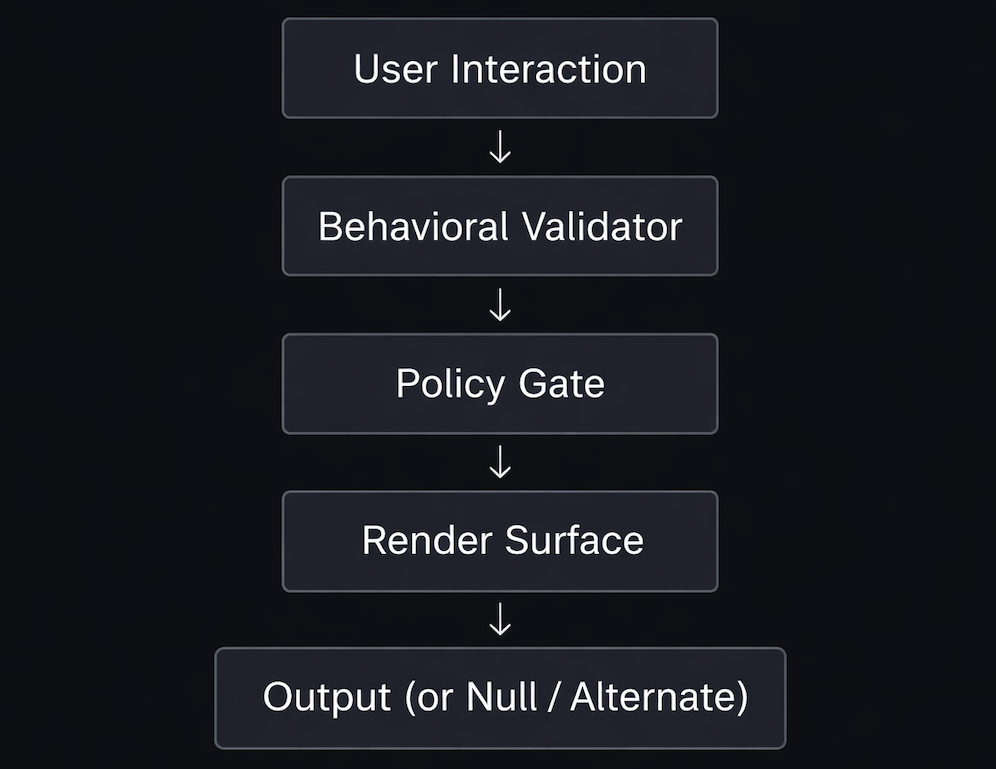

At a high level, the system separates into four concerns:

- Behavioral Validation

Determines whether the invocation process has been successfully performed

- Policy Evaluation

Determines what capability is unlocked, if any

- Content Rendering

Produces output only within validated execution windows

- Failure and Duress Handling

Ensures failed or coerced paths do not leak partial state

Several implementation details are intentionally omitted from this document, but the important property here is boundary placement: no component alone is sufficient to reconstruct protected content.
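The four concerns can be sketched as four narrow functions, with content reachable only inside a short-lived validation window. This is a minimal illustration under stated assumptions, not the Obelisk implementation: the function names, the `Window` type, the two-second `ttl`, the `POLICY_SAFE` placeholder, and the `render_delay` parameter (which simulates elapsed time instead of sleeping) are all hypothetical.

```python
import time
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"


@dataclass(frozen=True)
class Window:
    """A short-lived validation result; rendering is allowed only inside it."""
    verdict: Verdict
    expires_at: float


POLICY_SAFE = "[nothing to display]"  # hypothetical policy-safe artifact


def behavioral_validation(performed_ok: bool, now: float, ttl: float = 2.0) -> Window:
    """Concern 1: decide whether the invocation was performed. Sees no content."""
    verdict = Verdict.PASS if performed_ok else Verdict.FAIL
    return Window(verdict, now + ttl)


def policy_evaluation(window: Window) -> str:
    """Concern 2: map a verdict to a capability name. Sees no content."""
    return "full" if window.verdict is Verdict.PASS else "none"


def failure_handling() -> str:
    """Concern 4: every failed path returns the same artifact, never partial state."""
    return POLICY_SAFE


def content_rendering(capability: str, window: Window, now: float,
                      source: Callable[[], str]) -> str:
    """Concern 3: produce output only while the validated window is open."""
    if capability != "full" or now > window.expires_at:
        return failure_handling()
    return source()


def invoke(performed_ok: bool, source: Callable[[], str],
           render_delay: float = 0.0) -> str:
    """Wire the four concerns together; render_delay simulates elapsed time."""
    now = time.monotonic()
    window = behavioral_validation(performed_ok, now)
    capability = policy_evaluation(window)
    return content_rendering(capability, window, now + render_delay, source)
```

In this sketch the validator and the policy evaluator never touch the content source, and the renderer never calls the source without a passing, unexpired window, so a failed or late invocation returns the same placeholder in every case.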

More importantly, pressure applied to any single boundary does not locally weaken the system. Instead, it redistributes constraint across adjacent boundaries. Attempts to isolate, instrument, or coerce one component increase coupling demands on the others, which serves to narrow the admissible execution envelope rather than widen it. In effect, the system behaves as a passive, self-constricting mechanism: as an adversary presses inward on one surface, the remaining surfaces tighten and redirect risk back toward the attacker.

This redistribution is situational and automatic. The threat model bends in response to context (e.g. observation, replay, coercion, or analysis) without requiring explicit mode switches or alarms. Under cooperative conditions, boundaries relax just enough to permit policy-conformant collapse. Under adversarial or duress conditions, the same boundaries contract, reducing the space in which meaningful artifacts can materialize. The result is not escalation but attenuation: pressure produces less signal, not more.

7. Failure Modes and Tradeoffs

This system does not attempt to solve all access-control problems. It is designed to accept its limitations and work within them.

Known limitations include:

- It does not prevent screenshots or external capture of rendered output (hire a security guard)

- It does not provide perpetual secrecy once content is meaningfully consumed (nothing can)

- It prioritizes policy enforcement over perfect confidentiality

The system’s value lies in:

- enforcing when access occurs

- enforcing how access occurs

- ensuring access cannot be silently delegated

In environments where those constraints matter, possession-based systems fail both categorically and spectacularly.

8. Duress as a First-Class Condition

Most access-control systems implicitly assume a cooperative user.

They fail badly when that assumption breaks.

Obelisk explicitly models duress as a distinct operational condition rather than an edge case. Duress is defined broadly to include:

- coercion by another human

- compelled access under threat (legal, physical, or social)

- automation or proxy-driven invocation

- user behavior inconsistent with prior embodied patterns

Under duress, traditional systems behave too correctly.

They grant access precisely when access should be most suspect.

In contrast, Obelisk treats duress as a signal, not a failure.

When duress is detected or inferred:

- validation may succeed but unlock a constrained or alternate policy

- output may be intentionally degraded, delayed, or substituted

- the system may emit plausible but non-sensitive content

- the invocation may complete without producing the protected artifact at all

Importantly, duress handling is:

- non-announceable — the system does not signal that duress has been detected

- non-interactive — the user is not asked to confirm or deny coercion

- non-binary — responses exist along a policy-defined spectrum

This enables outcomes where:

- a coerced user can comply without betraying protected content

- an adversary cannot reliably distinguish success from containment

- the system remains behaviorally consistent under observation

Duress, in this model, is not an override.

It is an alternate execution path.
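One way to picture a non-binary, non-announcing duress response is a policy table that maps an inferred duress score to one of several outputs while keeping the outward response shape identical. The sketch below assumes a score in the range 0 to 1 is already available; how duress is inferred is not described in this document. The band thresholds, band names, and the `Response` type are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    """Outward envelope: the same fields on every path, so shape leaks nothing."""
    status: str
    body: str


# Hypothetical policy table: (upper bound on duress score, output band).
POLICY_BANDS = [
    (0.25, "protected"),   # cooperative conditions: the real artifact
    (0.60, "degraded"),    # some doubt: degraded output
    (0.85, "substitute"),  # likely duress: plausible, non-sensitive content
    (1.00, "empty"),       # near-certain duress: completes with no artifact
]


def select_band(duress_score: float) -> str:
    """Pick an output band along the policy-defined spectrum."""
    score = min(max(duress_score, 0.0), 1.0)  # clamp out-of-range scores
    for upper, band in POLICY_BANDS:
        if score <= upper:
            return band
    return "empty"


def respond(duress_score: float, bodies: dict[str, str]) -> Response:
    """Build the response; the status never reveals which band was chosen."""
    band = select_band(duress_score)
    return Response(status="ok", body=bodies[band])
```

Because every path returns `status="ok"` and asks the user nothing, an observer comparing two responses sees only the bodies, which the policy is free to make plausible. A real design would also need to equalize timing and side effects across paths, which this sketch does not attempt.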

9. Threat Model, Trust Boundaries, and Resilience Assumptions

The Obelisk framework is designed under the assumption that sufficiently motivated adversaries may possess virtually unlimited computational, financial, and analytical resources, including access to advanced automation and emerging compute paradigms.

Accordingly, the system does not rely on computational infeasibility alone for its security posture.

Adversarial Assumptions

The primary threat model includes adversaries capable of:

- full observation of system behavior at runtime

- coercion or compulsion of legitimate users (including legal, physical, or social pressure)

- large-scale simulation, replay, and optimization of input behaviors

- post hoc inspection of execution environments and artifacts

- application of advanced analytical techniques, including those that may benefit from future quantum acceleration

Quantum-Resilient Posture

Obelisk makes no assumption about the long-term exclusivity of classical cryptographic hardness. Where cryptographic primitives are employed, they are treated as supporting components, not as the sole line of defense.

The system’s core resilience derives instead from:

- behaviorally gated invocation rather than static secrets

- non-differentiable failure and duress paths

- policy-dependent collapse of payloads from latent states

In this sense, Obelisk is resilient not because it is unbreakable, but because there is no stable object to attack.

Avalanche and Non-Convergence Behavior

Small perturbations in invocation behavior (whether intentional or adversarial) are designed to produce disproportionate divergence in outcomes.

Repeated failed attempts do not converge toward success. Instead:

- unsuccessful invocations yield null or policy-safe artifacts

- duress-inferred invocations may yield plausible but non-sensitive outcomes

- iterative probing does not expose gradients useful for optimization

This avalanche behavior ensures that even adversaries with extensive resources cannot reliably refine attacks through trial and error.
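The non-convergence property can be illustrated with a standard construction: quantize the behavioral input, then pass it through a cryptographic hash. The sketch below is an analogy using SHA-256 from the Python standard library, not the mechanism Obelisk uses. The bucket `step`, the `context` value, and the function names are assumptions made for the example.

```python
import hashlib


def quantize(intervals: list[float], step: float = 0.05) -> tuple[int, ...]:
    """Bucket each timing so ordinary variance inside a bucket is tolerated."""
    return tuple(round(t / step) for t in intervals)


def outcome_digest(intervals: list[float], context: bytes = b"demo") -> bytes:
    """Derive an outcome selector from the quantized behavior."""
    buckets = quantize(intervals)
    return hashlib.sha256(context + repr(buckets).encode()).digest()


def hamming(a: bytes, b: bytes) -> int:
    """Count differing bits between two equal-length digests."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


baseline = outcome_digest([0.30, 0.50])
same_bucket = outcome_digest([0.31, 0.49])  # stays inside the same buckets
next_bucket = outcome_digest([0.34, 0.50])  # first interval crosses a boundary

print(hamming(baseline, same_bucket))  # identical digests, so zero differing bits
print(hamming(baseline, next_bucket))  # typically near half of the 256 bits
```

Inputs that fall in the same buckets produce the same digest, while an input that crosses a single bucket boundary produces an unrelated one. The distance between digests carries no information about how close the attempt was, which is the sense in which probing exposes no useful gradient.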

Explicit Non-Goals

The system does not attempt to:

- prevent capture of fully rendered output

- maintain secrecy after meaningful human consumption

- defend against adversaries with permanent, unrestricted control over both user and execution environment

Obelisk aims to make silent, delegable, and coerced extraction untenable.

10. Why This Is Difficult to Replicate

At a superficial level, elements of this system resemble DRM, biometric authentication, behavioral biometrics, or secure execution environments. At a deeper level, however, it resembles none of them.

Common failure modes in attempted replication include reintroducing static secrets for convenience, collapsing behavioral validation into identity, or optimizing for user throughput at the cost of enforcement integrity. The design requires resisting several intuitively reasonable shortcuts. Most teams seem to take those shortcuts.

11. Current State and Open Decisions

Several architectural paths remain intentionally open, including:

- the degree of validator portability between devices

- the extent to which policies are user-defined versus system-defined

- which embodiments remain public and which become irrevocable once released

Some of these decisions, once made, are not reversible.

This document is published before those locks close.

12. Closing Notes

This document describes a capability, not a company. No claim is made here about markets, valuation, or scale. The system will continue to evolve according to its own internal logic.